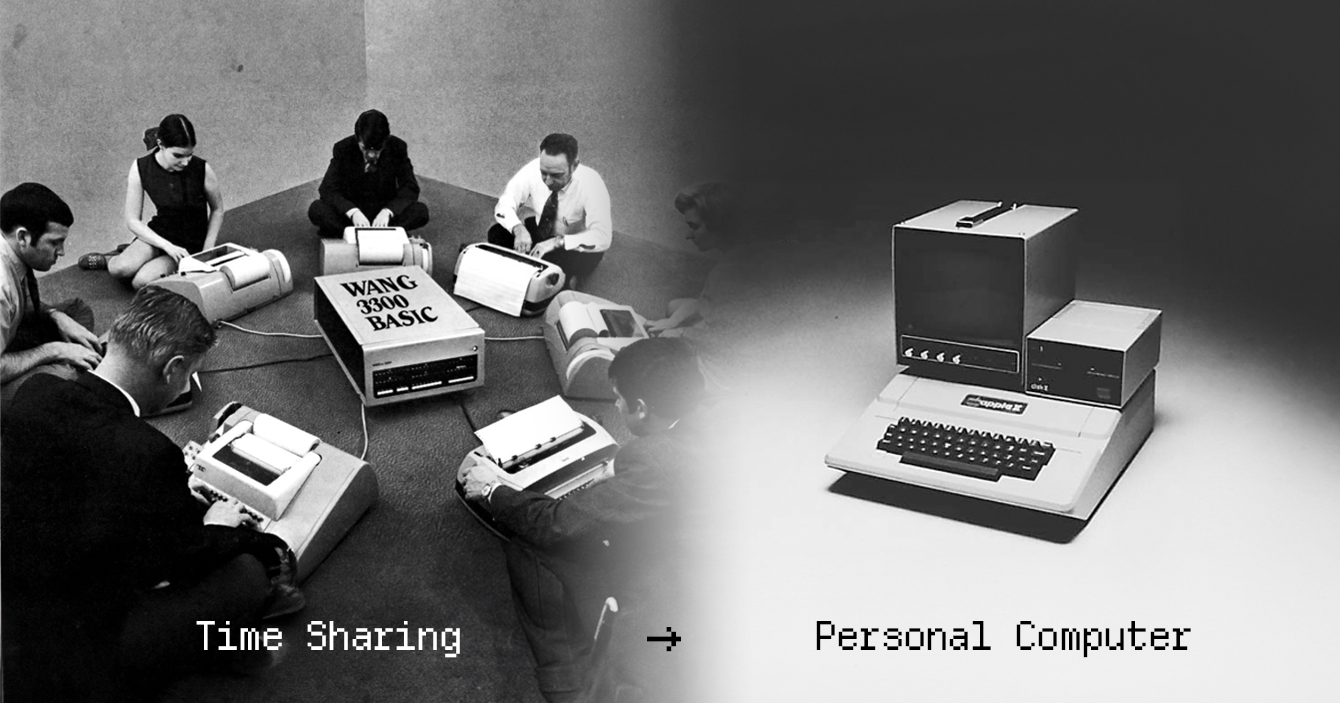

The year was 1960, the era of mainframes. Computing was extremely expensive, so it was centralized and time-shared among multiple terminals. No user had exclusive use of the computer. Over time, the demand for personal computers grew. Businesses, scholars, and hobbyists wanted direct, individual access to computing. This led to the rise of personal computers like the Apple II and IBM PC in the late 1970s and early 1980s.

Today, we face a similar situation with machine learning. Inference for large language models remains expensive, so it is centralized on powerful servers and shared among users through APIs. As more of our social and professional lives shift online, data has become the new currency. Privacy and security have become more important than ever, driving steady growth in the demand for local inference. With NPUs starting to appear in mainstream consumer PCs (AI PCs) in late 2023, we can expect local inference capabilities to improve significantly in the near future.

Modern high-end computers now come with foundation models built in, and both macOS and Windows provide APIs to access these models locally. However, these APIs are limited to native applications. For web applications, the models can by locally inferred in few ways.

On-Demand Model Loading

Today, we can run state-of-the-art machine learning models directly in the browser, thanks to libraries like Transformers.js and TensorFlow. I have played around mostly with Transformers.js, which uses ONNX Runtime for inference, and depending on the system’s hardware capabilities, models can run in any of the following ways:

- On the CPU using WebAssembly

- On the GPU using WebGPU

- Or on CPU, GPU, and NPU via the new WebNN API

The very first time user visits the site, the model is downloaded and cached, and so on subsequent visits there is no need to download it again. It can even work offline.

At first, I was quite skeptical about running ML models in the browser due to strict memory limits. But then I came across these lightweight tiny models capable of delivering high-quality results. For instance, kitten-tts-nano is under 25MB, generates realistic text-to-speech output, and runs entirely on the CPU without requiring GPU acceleration. Also in the case of small or medium-sized models we can make use of their quantized version for lower memory footprint and better performance.

I took a quantized version of Facebook’s musicgen-small model and built a web app that could generate music directly from the browser.

Here’s the demo.

Browser-Embedded Models

The approach of loading models on demand sounds practical at first, but consider what happens when AI adoption becomes widespread. Every website we visit may download its own model, leading to storage issues on the user’s device. From a developer’s perspective, this also creates high serving costs, as each user needs to download the model separately.

To address this, browser vendors are developing web platform APIs and features specifically designed to work with AI models directly in the browser. The idea is to embed the commonly used models into the browser itself, allowing them to be shared across websites, reducing storage overhead for users and lowering the delivery cost for developers.

The Chrome Built-in AI team is pioneering these platform APIs. As of writing this article, these APIs are available only in Chrome 138+ and in Opera 122+. The W3C web machine learning group has drafted the specification, hopefully it will eventually supported by all the browsers.

I have built a playground, where you can experiment with all the Chrome’s built-in AI APIs.

Here’s the demo.

Leveraging these APIs, I’ve also built three extensions for Zoho Desk: Translator, Summarizer, and an AI assistant.

Here’s the demo.

These are the approaches we currently have for running ML models locally in web applications. In the future, there may even be a web standard for accessing local models on computers, similar to the Local Font Access and File system APIs. Exciting times ahead!